By Kristinn R. Thórisson

On November 19th, 2024, the US-China Economic & Security Review Commission produced a report to Congress recommending that it initiate a “Manhattan Project-like program” to develop artificial general intelligence (AGI). This is not only laughable, it is madness. Let me explain why. It took 70 years for contemporary generative artificial intelligence (Gen-AI) technologies to mature. Countless scientific questions about how human intelligence works have yet to be answered. To think that AGI is so “just around the corner” that it can be forced into existence by a bit of extra funding reveals a lack of understanding of the issues involved. Below, I compress into 2,000 words what has taken me over 40 years to comprehend. Enjoy!

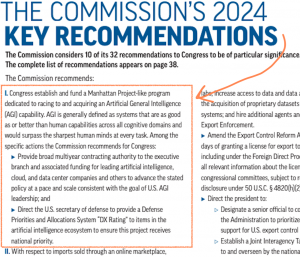

> I. Congress establish and fund a Manhattan Project-like program dedicated to racing to and acquiring an Artificial General Intelligence (AGI) capability. AGI is generally defined as systems that are as good as or better than human capabilities across all cognitive domains and would surpass the sharpest human minds at every task. Among the specific actions the Commission recommends for Congress:
>
> ▶ Provide broad multiyear contracting authority to the executive branch and associated funding for leading artificial intelligence, cloud, and data center companies and others to advance the stated policy at a pace and scale consistent with the goal of U.S. AGI leadership; and
>
> ▶ Direct the U.S. secretary of defense to provide a Defense Priorities and Allocations System “DX Rating” to items in the artificial intelligence ecosystem to ensure this project receives national priority. (USCC 2024 REPORT TO CONGRESS, p. 10)

This blurb not only gets the concept of AGI wrong, it reveals a deep misunderstanding of how science-based innovation works. The Manhattan Project was done in the field of physics. In this field, a new theory about nuclear energy had recently been proposed and verified by a host of trusted scientists. The theory, although often attributed to a single man, was of course the result of a long history of arduous work by generations of academics, as every historian who understands the workings of science knows. Additionally, physics is our oldest and most developed field of science. Sure, funding was needed to make the Manhattan Project happen, but the prerequisite foundational knowledge on which it rested surely did not come about because large business conglomerates wanted to apply nuclear power to their products and services, as is the case with AI today. For anyone interested in creating a ‘Manhattan Project’ for AGI: Without a proper theory of intelligence, it will never work!

“Artificial intelligence” was founded as a field of research in 1956, with the singular goal of creating machines with intelligence. AI is still a field of research, with precisely that aim. From the very beginning, its goal was to develop systems that could, one day, rival human intelligence. Thirty years later, in 1986, the field had still not produced convincing evidence that intelligent machines were on the horizon, or, in fact, that they were even possible. Rosie the Robot was nowhere to be found, and no human world champion in chess had been beaten by a machine, in spite of the field’s founding fathers having predicted, with some regularity for about two decades, that such an occurrence was imminent. While a foundation was laid in the 1980s for future vacuum-cleaning robots, as well as for the computing machinery that would go on to beat World Chess Champion Garry Kasparov in 1997, these successes would be largely attributed to computing power; a good theory of intelligence was nowhere to be found. The work rested simply and firmly on manual software development methods. In 1983 the President of AAAI wrote that “AI needs more emphasis on basic research” and that the field placed too much emphasis on applications. His argument is obvious to anyone who understands how major progress on scientific questions is made: Theoretical breakthroughs open the door to deeper understanding, which in turn enables paradigm shifts. Combinatorial technical improvements will only carry progress a short distance. The truth is that without good theories, little to no progress can be made. “Reaching human level artificial intelligence will require fundamental conceptual advances,” said John McCarthy in 1983.[JM83] This is still the case. You might think, “But surely, Kristinn, Gen-AI technologies have brought us far beyond where AI was in 1983?” On the key topic of relevance here, that is, intelligence, you’d be wrong.

In the early 2000s, two AI researchers, Dr. Pei Wang and Dr. Ben Goertzel, proposed a workshop on the theme of general intelligence, to be held at the annual conference of the world’s largest AI society, AAAI. AAAI’s leadership turned them down. They tried again a year later. Again they were rejected. Not only that, no other workshops on this topic were held in those years, nor in the years before. Yet the entire field of AI was originally created for the pursuit of intelligent machines, and its founding fathers clearly saw ‘human-level intelligence’ as an important milestone in this scientific pursuit. These facts seemed to have been forgotten. After some discussion with the AAAI conference organizers, Wang and Goertzel concluded that they would need to start their own conference to give general intelligence the scientific scrutiny it deserved. To make any progress on a challenging scientific topic, it is best to focus on precisely that topic. They knew this. They named their conference “Artificial General Intelligence”.

Fast forward to 2024. Everyone now believes that AI is “the future,” somehow, but the technologies on which this belief is based, like ChatGPT and other generative AI approaches, still feel like solutions in search of a problem. Yet, while Gen-AI and related technologies are certainly the product of AI research, which justifies calling them “AI,” and they can do things that no other technology can, they just don’t seem very intelligent. Quite the contrary: After extended use it becomes clear that they are pretty dumb. Calling them “artificial” also turns out to be based on a misunderstanding: These systems simply cannot work without massive amounts of content created by natural intelligence (humans).[ANN]

The idea that got us to this point can be traced back more than 70 years. In 1943 McCulloch and Pitts proposed an abstracted model of how natural neurons operate.[MP43] The first ‘artificial neural networks,’ SNARC and the Perceptron, were created in ’51 and ’57, respectively. Contemporary AI systems, such as large language models, deep neural networks, and other marvels of current AI, work on the same principles. It took some 70 years for these early ideas about mimicking nature by artificial means to become useful. Another deep misunderstanding is this: Contemporary artificial neural networks work nothing like natural neural networks, the gray matter that sits between our ears. They do not really “learn” in the normal sense of that word, and they can’t be trusted to change after they leave the lab. Nor do they do anything that could be considered “thinking”: Thought does not go straight from ‘input’ to ‘output’ in one fell swoop, and then … nothing. No, contemporary AI isn’t doing anything along the lines of “the sort of stuff that our brains do.” The similarity between these artificial neural networks and natural neural networks is in fact so small that calling it ‘poetic’ would be quite generous.

Back to 2024. ‘Artificial general intelligence’ is not a household concept, nor does it have a widely accepted definition, not even among experts. Don’t get me wrong, good definitions of general intelligence do exist. Among the best is Dr. Pei Wang’s groundbreaking definition, which separates the concept of intelligence from all other phenomena.[PW19] This definition has been well covered in publications over the past 20 years, yet the AI community has not paid it the attention it deserves (yours truly being a notable exception). Good theories of intelligence, like its definitions, are few and far between, and all of them are patchy. A notable one is “Society of Mind,” published in 1986 by one of the field’s founding fathers, Marvin Minsky.[MM86] A scientific theory, to refresh your memory, should explain the mechanisms behind the particular phenomenon it focuses on. An important feature of any scientific theory is that it covers all aspects of the phenomenon it aims to explain and provides sufficient detail to produce testable hypotheses about it. Most theories of intelligence barely meet these requirements; they are proto-theories at best, lacking in coverage, detail, cohesiveness, and testable predictions. Dr. Wang’s definition has served his research well: It has led to one of the purest and most complete theories of intelligence, meaning that it is more coherent and less patchy than most, as well as to a software demonstration of its fundamental ideas. Neither this theory nor Minsky’s has caught on in mainstream AI. Nor has the idea that theories are important.

Now the US-China Economic & Security Review Commission has recommended that a “Manhattan-like program” be put in motion for artificial general intelligence. This proposal is undoubtedly inspired by the tech giants’ efforts to scale up artificial neural networks. A Manhattan-size project based on little more than the ill-understood, technologically morphed version of a grossly simplified notion of how

When alchemists tried to create gold out of other materials in the 1100s, they did so without any good theories of matter. We now know that this pursuit of solid gold was completely impossible, a total waste of time, but this was not known at the time. It could not have been: They had no coherent theoretical foundations to stand on, no models of how matter is composed or how it behaves, and no access to good engineering principles. No proper theories existed of energy, nor of what makes one material different from another. Instead, they were completely technology-driven. Just like contemporary AI researchers who look at the many undeniably impressive tools in their labs and convince themselves that coupling these with some tinkering and a bit of luck will suffice to produce the magic of general intelligence, alchemists did the same with an eye toward pure gold. In the 1100s, the scientific method had not yet been invented, and the theoretical limitations of such a technology-based approach were not well understood. Their naïveté is understandable. Today this is different: We know that technology alone can only take us so far on a topic; after a while, the lack of fundamental understanding of the nature of the phenomena in question will stop us. That’s why we continue to fund basic research in academia: Ultimately, it pays off to have people dedicate their careers to answering difficult questions about the nature of the world. We know that such researchers do not rely on methods that largely lack a theoretical basis. We know that large language models are fed on a by-product of intelligent processes: written language. How could anyone think that this will magically reverse-engineer the mechanisms that produce this data? Isn’t that like trying to reduce a tree to its seed by squishing it? Or producing a fryer solely from a fried egg? Yes, it is.

There are many things that I’m uncertain about. This one is not one of those things. A Manhattan-like project for artificial intelligence in 2024 would be like a Manhattan-like project for alchemy in the 1100s. Lacking any proper scientific foundation, AGI pursued at the scale of the Manhattan Project would be not only complete folly but also a gigantic waste of money. A laughable idea, at best. Sure, some new technology is bound to be derived from it, but none of the results will be according to plan. Instead, the outcome will be a linear and disappointing continuation of the contemporary AI landscape: technologies that are sub-par, unreliable, difficult to comprehend, and untrustworthy. Of course, given the amount of money and effort spent, those involved will have to show something for it, something that can mask all the unfulfilled promises. So of course it will be presented as the real deal. We would see all the usual trickery: Subtle and not-so-subtle redefining of terms, twisting of facts, misdirection, and of course lies, lies and statistics.

But it gets worse.

Should a Manhattan-like project for artificial general intelligence be undertaken in the near future, it will unquestionably draw other nations into the fray. In spite of being sub-par and unpredictable, this technology will find its use in new “intelligent” weapons,[HRW] as unpredictability is every aggressor’s good friend: While the creation of new hospitals, bridges, public transport, and other valuable infrastructure calls for highly coordinated, well-managed technologies and processes, destruction feeds on chaos. As long as they get their shiny, high-speed, self-guided killer robots, warmongers can live with some mistaken killings and failed information campaigns, just as they forgive search engines for showing a few irrelevant results.

As this cold war turned into a drawn-out, multi-year effort, the world would see increasing imbalance and chaos. AGI would not turn out to be what was promised: Reliable, manageable, trustworthy automation, based on a deep scientific understanding of how minds work. No, the result would be a world where AI is not used for the common good, a world where AI is completely technology-driven, with little or no vision other than to make the multinationals even more powerful and unstoppable.

We need better AI, AI that can benefit all, for the betterment of humankind. This is of course possible.[TM22] To get a better future, one where you can trust advanced automation technologies with your personal information, where they can be relied on to automate tasks we’d rather not do ourselves, and where they are not solely in the hands of a handful of giant multinational corporations that turn your politicians into string puppets, we must advance the scientific foundations of AI. If you don’t like the direction contemporary AI is taking us, and would prefer AI technology that aligns better with a democratic future, can be tailored to the needs of individuals, gives you a direct say in how it behaves, and doesn’t require you to share sensitive data with companies that answer to different laws in different countries (in short, if we want trustworthy artificial intelligence that really understands us and the world we live in), then we must urge our friends, our CEOs, our politicians, and our contacts on social networks to look past the contemporary AI hype and argue against a Manhattan-style project for AGI at this time: It is not only premature, it is a recipe for a deeply nefarious future that none of us want.

FURTHER ON THIS TOPIC (added Jan. 11, 2025)

- We recommend the lecture “Towards a Proper Foundation for Robust Artificial Intelligence, Redux,” given by Gary Marcus at the 2024 International Artificial General Intelligence Conference in Seattle, which was chaired by Dr. Kris Thórisson.

- In a recent video, Sabine Hossenfelder, a physicist and YouTuber with a keen interest in AGI, addressed the question “Are We Getting AGI in 2025?” and showed a snippet of the above Gary Marcus talk from AGI 2024.

REFERENCES

ACKNOWLEDGMENTS

The author would like to thank G. Carvalho for comments on a draft of this article.