by M. A. Torrent

“Consolidating information power in a few massive platforms can be problematic. Some economists compare misinformation to pollution. Prebunking – or inoculating people before they encounter falsehoods – can significantly bolster defenses. Another potent way to curb disinformation is by targeting its profitability.”

This is the second half of a two-part series on cognitive warfare.

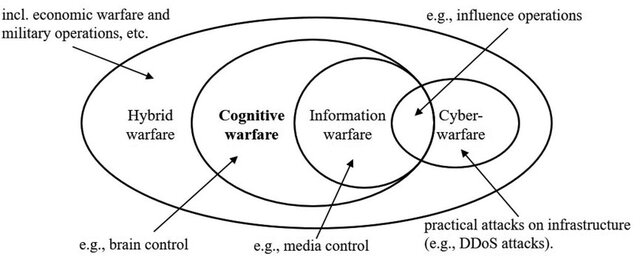

In Part 1 of this article, I introduced the problem of cognitive warfare weaving its way into our information ecosystems. Cognitive warfare, in short, is a strategy to influence or manipulate a population’s perceptions, beliefs, and therefore decisions by exploiting psychological vulnerabilities through available media systems, often via misinformation tactics. Fuelled by the interplay between AI-driven misinformation, cognitive biases, and groupthink, the clash between truth and falsehood in democratic discourse is rapidly growing louder. Yet it would be an oversimplification to conclude that we, the general public, are merely helpless victims. It may still be possible to reclaim and reshape the digital commons to serve our collective intelligence rather than exploit our collective memories. In this Part 2, we turn to an array of potential remedies. Whether by reforming existing platforms or by creating entirely new ones, fighting cognitive warfare in social and mainstream media requires a systemic shift on multiple fronts.

Fact-Checking: Reactive vs. Prebunking

Once a false claim takes hold, corrections face an uphill battle, a phenomenon known as the “continued influence” effect.1 2 Given how quickly information spreads across our networks, fact-checking often arrives too late. In contrast, prebunking – or inoculating people before they encounter falsehoods – can significantly bolster their defenses. A cross-cultural experiment using the Bad News game, for example, showed that even brief exposure to misinformation tactics helped participants identify manipulative content more readily.1 Academic reviews likewise confirm the effectiveness of inoculation across contexts, from climate myths to vaccine conspiracies.1 2 The potential scope and scale of such a measure should therefore not be underestimated: misinformation may never disappear, but the reasoning capabilities of the receiving population largely determine how much power it holds.

Inoculation strategies have already moved beyond the lab. In late 2022, for instance, Google’s Jigsaw ran a large-scale prebunking campaign in Eastern Europe to blunt Russian propaganda about Ukrainian refugees, using short videos that warned viewers of manipulative tactics before falsehoods could take root.2 Within two weeks, these clips reached about 25% of Poland’s population and one-third of people in Slovakia and the Czech Republic, and viewers showed a measurable boost in their ability to spot disinformation.2 Similarly, during the COVID-19 infodemic, “fake news vaccine” games and tutorials preempted myths, with studies showing that participants who engaged in prebunking were more skeptical of false claims than those who only saw fact-checks afterward.1 Taken together, these trials and field pilots suggest that prebunking can curb misinformation more effectively than reactive measures alone, while fact-checking remains necessary for the claims that slip through. Deploying both strategies in tandem therefore seems the most promising approach.

Countering Disinformation Through Decentralized, Independent Media

Consolidating information power in a few massive platforms can be problematic, and this concentration has recently prompted many users to seek out decentralized, community-driven spaces as alternatives. Wikipedia stands as a prime example of crowd-curated content that resists misinformation, owing to its volunteer editor base and transparent sourcing. One study even ranked both Wikipedia and Cofacts (a Taiwanese, messaging-app-based fact-checking platform that crowdsources both rumor submissions and debunks) among the most robust tools against online falsehoods.3 Cofacts frequently addresses rumors faster than professional fact-checkers, demonstrating how empowered communities can act as “immune systems” for entire information ecosystems.

Other decentralized efforts also help counter manipulation. The Fediverse (e.g., Mastodon) distributes moderation across independent servers, letting communities block disinformation hubs at will.4 Meanwhile, Bluesky, an emerging project initiated by former Twitter leadership, aims to create a similarly decentralized model in which users connect through distinct servers under an open protocol rather than relying on a single corporate-owned network. On Reddit, volunteer-driven subreddits like r/AskScience and r/AskHistorians strictly enforce verification rules.5 Even fan groups, such as K-pop’s BTS ARMY, have mobilized to debunk rumors.6 Investigative collectives like Bellingcat, operating free from corporate or governmental influence, have exposed state-sponsored disinformation, such as the false narratives surrounding the 2014 downing of Malaysia Airlines Flight MH17.7 By sharing transparent methodologies and inviting public tips, these communities can rapidly undermine official propaganda, illustrating how community-led moderation and distributed verification dilute the reach of organized falsehoods.

Countering Disinformation Through Financial Friction

Yet another potent way to curb disinformation is by targeting its profitability. Some economists compare misinformation to pollution, advocating Pigouvian taxes on platforms that profit from it.8 Nobel laureate Paul Romer, for instance, proposes taxing targeted advertising to discourage the engagement-driven algorithms that amplify extreme or false content.8 Others suggest using the proceeds to support public-interest journalism, effectively redirecting disinformation revenue toward constructive initiatives.
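The pollution analogy rests on standard Pigouvian logic, sketched below. This is a generic textbook illustration, not Romer’s specific proposal. The symbols are hypothetical placeholders: $q$ is the volume of engagement-driven amplification a platform chooses, $B(q)$ its advertising revenue, $C(q)$ its private costs, and $D(q)$ the external damage misinformation does to public discourse. $B$ is assumed concave, while $C$ and $D$ are assumed convex with an interior optimum.

```latex
\begin{aligned}
&\text{Platform alone:} && \max_q\; B(q) - C(q) &&\Rightarrow\; B'(\hat q) = C'(\hat q) \\
&\text{Social optimum:} && \max_q\; B(q) - C(q) - D(q) &&\Rightarrow\; B'(q^*) = C'(q^*) + D'(q^*) \\
&\text{With tax } t = D'(q^*): && \max_q\; B(q) - C(q) - t\,q &&\Rightarrow\; B'(q) = C'(q) + D'(q^*),\ \text{satisfied at } q = q^*
\end{aligned}
```

Because $D'(q^*) > 0$, the untaxed platform overshoots ($\hat q > q^*$). The tax closes that gap by charging the platform the marginal harm at the optimum. In practice, $D'$ is hard to measure, so any real-world tax would have to rely on an observable proxy. Taxing targeted-ad revenue, as in Romer’s proposal, is one such choice.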

In practice, economic pressure has had demonstrable effects. Between 2016 and 2017, the far-right outlet Breitbart lost about 90% of its advertisers after a grassroots group, Sleeping Giants, alerted brands that their ads appeared alongside extremist content.9 10 In 2017, a YouTube ad boycott forced the platform to tighten monetization rules when major companies discovered their ads were running on extremist videos.11 The Stop Hate For Profit campaign similarly pressured Facebook into policy changes regarding hate speech and misinformation.11 Meanwhile, demonetizing repeat misinformers, such as by removing ad revenue from anti-vaccine channels, cuts off vital funding streams. Watchdogs such as the Global Disinformation Index reinforce this approach by maintaining “do-not-buy” lists of known purveyors. When combined with transparency, decentralization, and prebunking, these financial interventions can fortify the information ecosystem against manipulation.

Digital Literacy and Conscious Engagement

Current social platforms often capitalize on psychological vulnerabilities in both youths and adults. Teenagers, in particular, occupy a delicate phase of identity formation in which social validation, body-image concerns, and self-esteem issues can all be amplified and manipulated for commercial gain. Moreover, research suggests that the unrelenting feed of rapidly changing stimuli characteristic of platforms like Instagram and YouTube, among many others, may erode attention spans, making it harder to focus on more complex tasks.12

Prolonged exposure during adolescence, when impulse control and higher-order reasoning are still developing, may also impair decision-making over time.13 Some current estimates place total daily screen time at over 10 hours across devices.14 Sustained over an 80-year life, that pace would add up to more than three decades spent glued to screens, eclipsing even the ~27 years most people spend sleeping.
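The lifetime comparison is simple proportion arithmetic. As a rough sketch, it assumes a constant 10 hours of screen time and 8 hours of sleep every day for all 80 years. That overstates screen use in early childhood and ignores overlap between devices used at the same time:

```latex
\begin{aligned}
\text{screen time} &= \tfrac{10\ \text{h}}{24\ \text{h}} \times 80\ \text{yr} \approx 33.3\ \text{yr} \\
\text{sleep} &= \tfrac{8\ \text{h}}{24\ \text{h}} \times 80\ \text{yr} \approx 26.7\ \text{yr}
\end{aligned}
```

Even at six hours a day, the same calculation gives $\tfrac{6}{24} \times 80 = 20$ years, or two full decades.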

If we want to move toward more conscious engagement, experts recommend digital literacy programs that train users to spot emotionally manipulative content as well as the “dark patterns” built into addictive design.15 Psychologists also stress the value of structured “offline” periods, giving both adults and teens a break from incessant notifications and content feeds. On the policy side, proposals include age restrictions on social media, curbs on hyper-targeted ads (especially those preying on body-image issues), and friction-based app interventions, such as prompts to pause or daily screen limits. By combining mindful consumption habits with responsible platform design and regulatory oversight, users of all ages could step back from exploitative engagement loops, preserving the mental bandwidth needed for healthier, more purposeful living.

Algorithmic Transparency, Corporate Accountability, and the Limits of Mitigation

Demand for algorithmic transparency and more accountable content moderation has grown in response to rampant misinformation, even as high-profile political figures continue to exploit social media at scale. In the EU, the Digital Services Act (DSA) compels very large platforms like Meta, Google, TikTok, and X to explain how their recommender systems work and to offer users at least one feed option that is not based on profiling.16 Meta, for instance, introduced chronological, non-personalized feeds on Facebook and Instagram in Europe,17 16 while large platforms must also undergo independent audits and share data with researchers. In the U.S., states like Missouri have pushed for legally enforced user choice in content filtering.18 Although framed as consumer protection, these measures may only scratch the surface when well-known political figures, such as Donald Trump, continue to command attention with provocative or verifiably false claims.

Meanwhile, calls for corporate accountability go beyond revealing how algorithms rank posts. The emergence of transparency reports, such as Facebook’s Community Standards Enforcement Report, opened the door to data on hate speech, spam, and fake accounts.19 2 Yet the presence of top tech CEOs at major political events, coupled with subsequent allegations of biased or inconsistent moderation (e.g., Instagram purportedly censoring certain content or boosting right-leaning narratives), underscores how easily platforms can be perceived as complicit. Even after Facebook publicly posted its once-secret rulebook20 and Twitter (now X) open-sourced parts of its recommendation algorithm, whistleblower leaks (e.g., Frances Haugen’s disclosures21) and Congressional hearings continue to reveal behind-the-scenes politics. As a result, many argue that superficial compliance, such as offering “algorithmic sunlight,” has done little to curb powerful public figures who repeatedly push falsehoods.

The tension between well-intentioned policy interventions and the enduring, large-scale influence of demagogic voices exemplifies the limits of purely technical or regulatory fixes. Antitrust actions and transparency mandates can compel Big Tech to alter certain practices, but widespread misinformation persists when audiences remain willing to share and believe such content – regardless of what the platform discloses. As some critics note, truly addressing the problem requires structural shifts: consistent enforcement of moderation policies (no matter a figure’s status), credible legal frameworks that penalize deliberate misinformation, and a cultural refusal to enable “alternative facts.”

Ultimately, while algorithmic disclosures, open-data pushes, and public audits mark progress, they often appear “light” compared to the vast network of political, financial, and social forces that continue to exploit online spaces for their own ends.

Footnotes & References

1. Prebunking interventions based on the psychological theory of “inoculation” can reduce susceptibility to misinformation across cultures | HKS Misinformation Review. https://misinforeview.hks.harvard.edu/article/global-vaccination-badnews/
2. Disinformation as a weapon of war: the case for prebunking | Friends of Europe. https://www.friendsofeurope.org/insights/disinformation-as-a-weapon-of-war-the-case-for-prebunking/
3. Crowdsourced fact-checking fights misinformation in Taiwan | Cornell Chronicle. https://news.cornell.edu/stories/2023/11/crowdsourced-fact-checking-fights-misinformation-taiwan
4. Content Moderation on Distributed Social Media (PDF) | University of Minnesota Law School. https://scholarship.law.umn.edu/cgi/viewcontent.cgi?article=2040&context=faculty_articles
5. Countering Disinformation Effectively: An Evidence-Based Policy Guide | Carnegie Endowment. https://carnegieendowment.org/research/2024/01/countering-disinformation-effectively-an-evidence-based-policy-guide?lang=en
6. Community-based strategies for combating misinformation: Learning from a popular culture fandom | HKS Misinformation Review. https://misinforeview.hks.harvard.edu/article/community-based-strategies-for-combating-misinformation-learning-from-a-popular-culture-fandom/
7. Bellingcat: Courageous Journalism Unveiling the Truth Ahead of Britain | New Geopolitics Research Network. https://www.newgeopolitics.org/2023/02/21/bellingcat-courageous-journalism-unveiling-the-truth-ahead-of-britain/
8. Misinformation as Information Pollution | arXiv. https://arxiv.org/html/2306.12466
9. Breitbart News threatens Sleeping Giants with a lawsuit | Columbia Journalism Review. https://www.cjr.org/the_new_gatekeepers/breitbart-news-threatens-sleeping-giants-with-a-lawsuit.php
10. Steve Bannon caught admitting Breitbart lost 90% of advertising after Sleeping Giants campaign | The Independent. https://www.independent.co.uk/news/world/americas/us-politics/steve-bannon-breitbart-boycott-advertising-sleeping-giants-trump-a8854381.html
11. Leveraging Brands against Disinformation | Items (Social Science Research Council). https://items.ssrc.org/beyond-disinformation/leveraging-brands-against-disinformation/
12. Twenge, J. M. & Campbell, W. K. (2018). “Associations Between Screen Time and Lower Psychological Well-Being Among Children and Adolescents.” Preventive Medicine Reports.
13. Lin, L.-Y. et al. (2022). “Mobile Device Usage and Adolescent Impulse Control Development.” Journal of Pediatric Psychology.
14. Statista (2023). Average daily time spent with digital media in the U.S.
15. American Academy of Pediatrics (2021). Recommendations on media use for children and adolescents.
16. A guide to the Digital Services Act, the EU’s new law to rein in Big Tech | AlgorithmWatch. https://algorithmwatch.org/en/dsa-explained/
17. DSA: Meta apps are getting chronological feeds in Europe | Silicon Republic. https://www.siliconrepublic.com/business/meta-facebook-instagram-chronological-feed-eu-dsa
18. Attorney General Bailey Promulgates Regulation Securing Algorithmic Freedom for Social Media Users | Missouri AG Office. https://ago.mo.gov/attorney-general-bailey-promulgates-regulation-securing-algorithmic-freedom-for-social-media-users
19. Facebook Releases First-Ever Community Standards Enforcement Report | Electronic Frontier Foundation. https://www.eff.org/deeplinks/2018/05/facebook-releases-first-ever-community-standards-enforcement-report
20. Facebook releases content moderation guidelines – rules long kept secret | The Guardian. https://www.theguardian.com/technology/2018/apr/24/facebook-releases-content-moderation-guidelines-secret-rules
21. Facebook’s Algorithm Comes Under Scrutiny | Centre for International Governance Innovation. https://www.cigionline.org/articles/facebooks-algorithm-comes-under-scrutiny