By Kristinn R. Thórisson

On November 19th, 2024, the US-China Economic & Security Review Commission delivered a report to Congress recommending that it initiate a “Manhattan Project-like program” to develop artificial general intelligence (AGI). This is not only laughable, it is madness. Let me explain why. It took 70 years for contemporary generative artificial intelligence (Gen-AI) technologies to mature. Countless scientific questions about how human intelligence works have yet to be answered. To think that AGI is so “just around the corner” that it can be forced into existence by a bit of extra funding reveals a lack of understanding of the issues involved. Below, I compress into 2,000 words what has taken me over 40 years to comprehend. Enjoy!

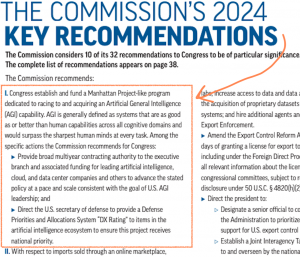

I. Congress establish and fund a Manhattan Project-like program dedicated to racing to and acquiring an Artificial General Intelligence (AGI) capability. AGI is generally defined as systems that are as good as or better than human capabilities across all cognitive domains and would surpass the sharpest human minds at every task. Among the specific actions the Commission recommends for Congress:

▶ Provide broad multiyear contracting authority to the executive branch and associated funding for leading artificial intelligence, cloud, and data center companies and others to advance the stated policy at a pace and scale consistent with the goal of U.S. AGI leadership; and

▶ Direct the U.S. secretary of defense to provide a Defense Priorities and Allocations System “DX Rating” to items in the artificial intelligence ecosystem to ensure this project receives national priority. (USCC 2024 REPORT TO CONGRESS, p.10)

This blurb not only gets the concept of AGI wrong, it reveals a deep misunderstanding of how science-based innovation works. The Manhattan Project was carried out in the field of physics, where a new theory about nuclear energy had recently been proposed and verified by a host of trusted scientists. The theory, although often attributed to a single man, was of course the result of a long history of arduous work by generations of academics, as every historian who understands the workings of science knows. Additionally, physics is our oldest and most developed field of science. Sure, funding was needed to make the Manhattan Project happen, but the prerequisite foundational knowledge on which it rested surely did not exist because large business conglomerates wanted to apply nuclear power to their products and services, as is currently the case with AI. For anyone interested in creating a ‘Manhattan Project’ for AGI: Without a proper theory of intelligence, it will never work!
