IIIM is working to advance state-of-the-art machine learning techniques for hard problems in artificial intelligence research, in particular scaling reinforcement learning to the larger and more complex problems that next-generation learning systems will face.

Humans learn many things at once. Children learn to walk and talk over a period of time during which they receive feedback for each skill intermittently and with no clearly marked time dedicated to learning only one skill or the other. This is the norm for learning amongst humans. However, to date, reinforcement learning has been limited to single, well-defined tasks arranged sequentially. Machine learning agents can learn to play checkers at champion level, and they can learn to avoid obstacles in simple robot navigation tasks, but the kind of life-long learning needed to enable vastly more flexible agents has been out of reach.

Reinforcement Learning

Reinforcement learning (RL) is a popular method for enabling autonomous agents to learn new tasks using only feedback gathered through interaction with their environment. For many difficult problems in AI this approach is desirable, because we often cannot define the behaviors we want agents to learn precisely enough to program those behaviors directly.
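As an illustration of learning from interaction alone, here is a minimal sketch of tabular Q-learning, one standard RL algorithm. It is not the method used in this project. The five-state corridor environment, the `step`, `train`, and `greedy_policy` functions, and every parameter value are assumptions made up for this example. The agent is never told that "move right" is correct; it receives only a reward of 1 on reaching the last state.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the goal (hypothetical toy environment)
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn action values purely from (state, action, reward) experience."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy choice; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward the bootstrapped target.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best known action (-1 or +1) for each non-terminal state."""
    return [ACTIONS[0 if row[0] > row[1] else 1] for row in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

A single small task like this is exactly the setting in which RL already works well; the difficulty addressed here arises when many such tasks, with different rewards, must be learned at the same time.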

Transfer learning – the ability of agents to recognize similarities between learning tasks and to reuse skills already learned for one task to speed the acquisition of new, related skills – also plays a critical role in this project and in our ability to scale reinforcement learning methods to the difficult problems faced in building ever more intelligent machines.

Learning Multiple Diverse Tasks

IIIM is leading the project “Large-Scale Machine Learning for Simultaneous Heterogeneous Tasks” (funding began in May 2012) to begin to address the problem of learning many diverse tasks at once. Current approaches focus on a single isolated task or, at most, a very small number of closely related tasks, such as robot navigation with a secondary task of keeping the batteries charged. These approaches cannot scale to more open-ended environments in which great numbers of diverse skills must be acquired concurrently.

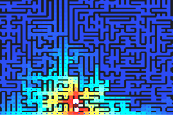

Drawing on expertise in multi-objective optimization and search space analysis, IIIM researchers are developing a simulation testbed for evaluating multi-task learning algorithms, and are using it to better understand how current algorithms fail as the number of tasks increases. The goal of the project is then to use these insights to develop improved learning algorithms able to handle at least an order of magnitude more concurrent and diverse tasks.

Currently the simulator can generate random problems of several different types, as well as spatial navigation problems and mazes. Ongoing work is adding further random and realistic problem types and using the simulations to evaluate current multi-task learning methods.

